티스토리 뷰

MAT: Mask-Aware Transformer for Large Hole Image Inpainting

상솜공방 2023. 10. 24. 10:59

convolutional head

input data: incompleted image Im and given mask M

to extract tokens

one: change the input dimension

three: down sample the resolution(1/8 size)

numbers of convolution channels and FC dimensions to 180 for the head, body, and reconstruction modules.

reason of this module

1. it is designed for fast downsampling to reduce computational complexity and memory cost.

2. empirically find this design is better than the linear projection head used in ViT, as validated in the supplementary material.

3. the incorporation of local inductive priors in early visual processing remains vital for better representation and optimizability.

transformer body

five stages of transformer blocks at varying resolutions (with different numbers of tokens)

long-range interactions via the proposed multi-head contextual attention (MCA)

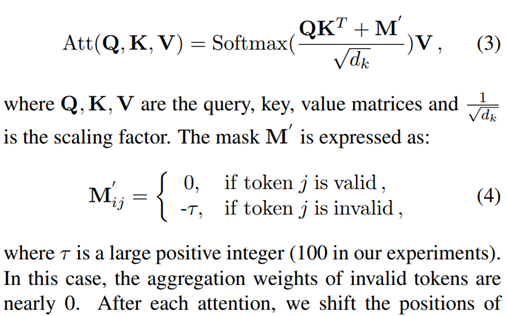

◀ 도표 설명

TB: adjusted transformer block

MCA: proposed multi-head contextual attention

Normal box: valid token

X box: nvalid token

The blue arrow: indicates the output of attention is computed as the weighted sum of valid tokens (indicated by blue arrows) while ignoring invalid tokens.

◀ 기존의 트랜스포머 모듈과 다른 점

1. 중간에 있는 Layer Norm을 삭제

we observe unstable optimization using the general block when handling large-scale masks, sometimes incurring gradient exploding. The reason is the large ratio of invalid tokens (their values are nearly zero). In this circumstance, layer normalization may magnify useless tokens overwhelmingly, leading to unstable training.

2. 패치 값을 더하지 않고 Concat 함

Residual learning generally encourages the model to learn high-frequency contents. However, considering most tokens are invalid at the beginning, it is difficult to directly learn high-frequency details without proper low-frequency basis in GAN training, which makes the optimization harder. Replacing such residual learning with concatenation leads to obviously superior results.

3. 어텐션과 MLP 모듈 사이에 FC 레이어가 들어감

4. 4개의 TB 이후 Conv를 통과시킨 뒤 잔차 연결

3x3 Conv가 포지션 임베딩을 대신한다. 나는 지역적인 어텐션을 함께 넣을 것이므로 참조 논문을 읽고 공부를 해야겠다. (참조 논문: 59, 62)

만약 포지션 임베딩을 하겠다고 하면, relative position matirx인 B를 더해주는 방법을 취하자.(Swin transformer에서 사용된 방법이다.)

To handle a large number of tokens (up to 4096 tokens for 512 × 512 images) and low fidelity in the given tokens (at most 90% tokens are useless), our attention module exploits shifted windows [36] and a dynamical mask. all tokens in a window are updated to be valid after attention as long as there is at least one valid token before. If all tokens in a window are invalid, they remain invalid after attention.

Figure 4. Toy example of mask updating. The feature map is initially partitioned into 2 × 2 windows (in orange). “U” means a mask updating after attention and “S” indicates the window shift. For images dominated by missing regions, the default attention strategy not only fails to borrow visible information to inpaint the holes, but also undermines the effective valid pixels. To reduce color discrepancy or blurriness, we propose to only involve valid tokens (selected by a dynamic mask) for computing relations.

The block numbers and window sizes of 5-level transformer groups are {2, 3, 4, 3, 2} and {8, 16, 16, 16, 8}

convolutional tail

convolution-based reconstruction module is adopted to upsample the spatial resolution to the input size.

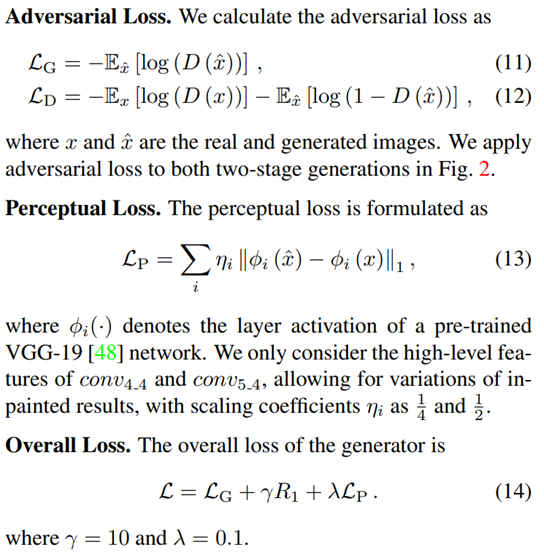

Loss Function

To improve the quality and diversity of the generation, we adopt the non-saturating adversarial loss [16] for both two stages to optimize our framework, regardless of the pixel-wise MAE or MSE loss that usually leads to averaged blurry results. We also use the R1 regularization [39, 46], written as R1 = Ex ∥∇D(x)∥. Besides, we adopt the perceptual loss [24] with an empirically low coefficient since we notice it enables easier optimization.

'논문 분석 > 2D 컴퓨터 비전' 카테고리의 다른 글

| Addressing unfamiliar ship type recognition (0) | 2025.03.19 |

|---|---|

| Swin Transformer - Hierarchical Vision Transformer using Shifted Windows (0) | 2024.01.12 |

| Two-stage ViT based ship image inpainting (0) | 2023.11.04 |

| An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (0) | 2023.09.29 |